Bayesian Neural Networks

Bayesian Neural Networks (BNNs) are a relatively recent addition to the machine learning toolbox. This tutorial explains what BNNs are and how they can be used to model uncertainty.

What is a BNN?#

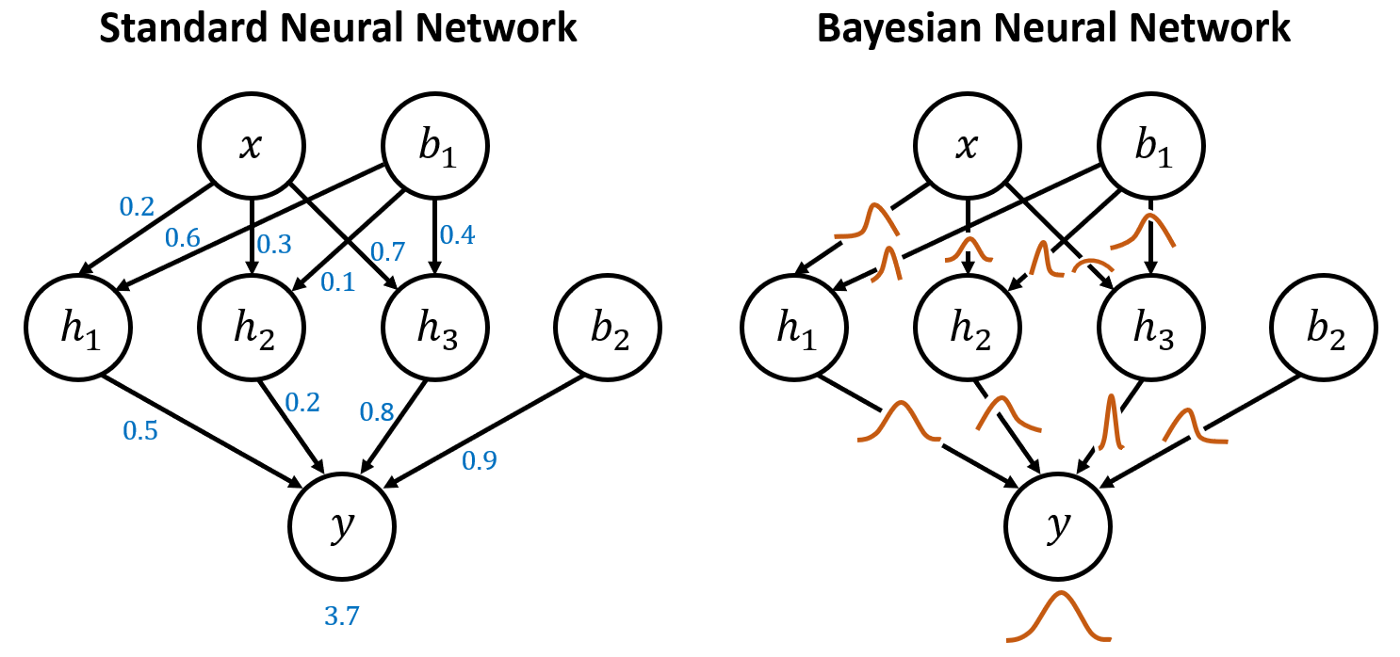

Basically a BNN is a machine learning method that combines neural networks with Bayesian inference. The Bayesian approach is applied to all trainable (weights, bias etc.) variables of the network available, which means that the BNN will try and find their marginal distribution. The purpose of a BNN is to quantify uncertainties that are in the model with the help of weights and outputs.

In the following figure the essential difference between a simple Neural Network and a BNN is shown. While in the simple NN the connections after training are represented by a single value, in the BNN these are represented by distributions.
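The contrast between single-valued and distribution-valued connections can be illustrated with one neuron. The sketch below is not taken from DataFusionTools; it assumes, purely for illustration, that the weight and bias of the neuron follow independent Gaussian distributions, and the function names `deterministic_neuron` and `bayesian_neuron` are hypothetical.

```python
import math
import random
import statistics


def sigmoid(x: float) -> float:
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-x))


def deterministic_neuron(x: float, weight: float, bias: float) -> float:
    """Simple NN: weight and bias are single values, so the output is one number."""
    return sigmoid(weight * x + bias)


def bayesian_neuron(x, weight_mean, weight_std, bias_mean, bias_std,
                    n_samples=2000, seed=0):
    """BNN-style neuron: weight and bias are (assumed Gaussian) distributions.

    Each forward pass draws a fresh weight and bias, so repeated passes give
    a spread of outputs. The mean and standard deviation of that spread are
    returned.
    """
    rng = random.Random(seed)
    outputs = []
    for _ in range(n_samples):
        w = rng.gauss(weight_mean, weight_std)
        b = rng.gauss(bias_mean, bias_std)
        outputs.append(sigmoid(w * x + b))
    return statistics.mean(outputs), statistics.stdev(outputs)


point_estimate = deterministic_neuron(1.0, weight=0.5, bias=0.1)
mean, spread = bayesian_neuron(1.0, 0.5, 0.3, 0.1, 0.1)
print(f"simple NN output: {point_estimate:.3f}")
print(f"BNN output: {mean:.3f} +/- {spread:.3f}")
```

The simple neuron returns one number, whereas the Bayesian neuron returns a mean together with a spread; that spread is the kind of uncertainty a BNN is meant to expose.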

Model example#

First, import the required packages.

```python
import pickle
from pathlib import Path

import datafusiontools.machine_learning.enumeration_classes as class_enums
from datafusiontools._core.data_input import Data, Variable, Geometry
from datafusiontools._core.utils import CreateInputsML, AggregateMethod
from datafusiontools.machine_learning.bayesian_neural_network import BayesianNeuralNetwork
```

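With the packages imported, the input data can be prepared with the function get_input_data, defined below. The data are stored in a pickle file; the function loads them, wraps each measurement in a Data object, combines the three data sources, and returns the training and validation sets together with the feature names.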
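The pickle file itself is not shown in this tutorial, but the keys accessed in get_input_data suggest its layout. The sketch below builds a tiny synthetic stand-in with that layout and round-trips it through pickle in memory. All values, the entry names `cpt_1` and `res_1`, and the use of plain Python lists are assumptions made for illustration; the real file may well store NumPy arrays.

```python
import io
import pickle

# Hypothetical stand-in for the contents of "test_case_DF.pickle".
# Only the key names are taken from get_input_data; the values are made up.
cpts = {
    "cpt_1": {
        "coordinates": (0.0, 0.0),
        "NAP": [0.0, -0.5, -1.0],  # depth axis (independent variable)
        "water": [0.0, 5.0, 10.0],
        "tip": [1.2, 1.5, 2.1],
        "IC": [2.0, 2.3, 2.6],     # used as the training target
        "friction": [0.01, 0.02, 0.02],
    },
}
resistivity = {
    "res_1": {
        "coordinates": (1.0, 0.5),
        "NAP": [0.0, -0.5, -1.0],
        "resistivity": [30.0, 28.0, 25.0],
    },
}
insar = {
    "coordinates": [(0.5, 0.5), (2.0, 1.0)],  # one entry per InSAR point
    "time": [0.0, 1.0, 2.0],                  # shared time axis
    "displacement": [[0.0, -0.1, -0.2], [0.0, -0.05, -0.1]],
}


def round_trip(payload):
    """Pickle and unpickle in memory, mimicking the save/load cycle."""
    buffer = io.BytesIO()
    pickle.dump(payload, buffer)
    buffer.seek(0)
    return pickle.load(buffer)


# The file is unpacked as a 3-tuple, exactly as in get_input_data.
loaded_cpts, loaded_resistivity, loaded_insar = round_trip((cpts, resistivity, insar))
```

Note that the InSAR data are assumed to be a single dictionary with parallel lists, whereas the CPT and resistivity data are dictionaries keyed by measurement name; this mirrors how the three sources are iterated in the function.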

```python
def get_input_data():
    """Load the pickled test case and build training and validation sets."""
    input_files = "test_case_DF.pickle"
    with open(input_files, "rb") as f:
        (cpts, resistivity, insar) = pickle.load(f)

    # create List[Data] for the CPT measurements
    cpts_list = []
    for name, item in cpts.items():
        location = Geometry(x=item["coordinates"][0], y=item["coordinates"][1], z=0)
        cpts_list.append(
            Data(
                location=location,
                independent_variable=Variable(value=item["NAP"], label="NAP"),
                variables=[
                    Variable(value=item["water"], label="water"),
                    Variable(value=item["tip"], label="tip"),
                    Variable(value=item["IC"], label="IC"),
                    Variable(value=item["friction"], label="friction"),
                ],
            )
        )

    # create List[Data] for the resistivity measurements
    resistivity_list = []
    for name, item in resistivity.items():
        location = Geometry(x=item["coordinates"][0], y=item["coordinates"][1], z=0)
        resistivity_list.append(
            Data(
                location=location,
                independent_variable=Variable(value=item["NAP"], label="NAP"),
                variables=[
                    Variable(value=item["resistivity"], label="resistivity")
                ],
            )
        )

    # create List[Data] for the InSAR displacement time series
    insar_list = []
    for counter, coordinates in enumerate(insar["coordinates"]):
        location = Geometry(x=coordinates[0], y=coordinates[1], z=0)
        insar_list.append(
            Data(
                location=location,
                independent_variable=Variable(value=insar["time"], label="time"),
                variables=[
                    Variable(
                        value=insar["displacement"][counter], label="displacement"
                    )
                ],
            )
        )

    # combine each CPT with the 10 closest InSAR points (summed displacement)
    create_features = CreateInputsML()
    aggregated_features = create_features.find_closer_points(
        input_data=cpts_list,
        combined_data=insar_list,
        aggregate_method=AggregateMethod.SUM,
        interpolate_on_independent_variable=False,
        aggregate_variable="displacement",
        number_of_points=10,
    )
    # then with the 10 closest resistivity points (mean resistivity)
    aggregated_features = create_features.find_closer_points(
        input_data=aggregated_features,
        combined_data=resistivity_list,
        aggregate_method=AggregateMethod.MEAN,
        aggregate_variable="resistivity",
        interpolate_on_independent_variable=True,
        number_of_points=10,
    )
    assert len(aggregated_features) != 0

    # select the input features and the target (IC) for every location
    for aggregated_feature in aggregated_features:
        create_features.add_features(
            aggregated_feature,
            ["tip", "displacement", "resistivity"],
            use_independent_variable=True,
            use_location_as_input=(True, True, False),
        )
        create_features.add_targets(aggregated_feature, ["IC"])

    # split into training and validation sets
    create_features.split_train_test_data()
    training_data = create_features.get_features_train(flatten=False)
    target_data = create_features.get_targets_train(flatten=False)
    validation_training = create_features.get_features_test(flatten=False)
    validation_target = create_features.get_targets_test(flatten=False)
    return (
        training_data,
        target_data,
        validation_training,
        validation_target,
        create_features.get_feature_names(),
    )
```
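The two find_closer_points calls combine each CPT location with values from its nearest neighbours in another data set, once with a sum and once with a mean. How DataFusionTools implements this is not shown here, so the following is only a simplified sketch of the general idea of nearest-point aggregation. It assumes scalar values and 2D Euclidean distance, and `aggregate_nearest` is a hypothetical helper, not a library function.

```python
import math


def aggregate_nearest(target_xy, points, k, method="mean"):
    """Aggregate the values of the k points closest to target_xy.

    points is a list of ((x, y), value) pairs. This is a simplified
    illustration: the real library works on Data objects and may also
    interpolate along the independent variable.
    """
    if not points or k < 1:
        raise ValueError("need at least one point and k >= 1")
    # Rank all candidate points by distance to the target location.
    ranked = sorted(points, key=lambda p: math.dist(target_xy, p[0]))
    nearest_values = [value for _, value in ranked[:k]]
    if method == "sum":
        return sum(nearest_values)
    if method == "mean":
        return sum(nearest_values) / len(nearest_values)
    raise ValueError(f"unknown aggregation method: {method}")


# Three made-up measurement points around a CPT located at the origin.
measurements = [((0.0, 0.0), 1.0), ((1.0, 0.0), 2.0), ((5.0, 5.0), 10.0)]
print(aggregate_nearest((0.0, 0.0), measurements, k=2, method="mean"))
print(aggregate_nearest((0.0, 0.0), measurements, k=2, method="sum"))
```

With k=2, the distant third point is ignored, so only the two nearby values contribute to the aggregate.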

To train the BNN, first initialize the datafusiontools.machine_learning.bayesian_neural_network.BayesianNeuralNetwork class and then call its train method, as shown in the snippet that follows.

```python
# extract inputs
training_data, target_data, validation_training, validation_target, feature_names = get_input_data()

# initialize model
nn = BayesianNeuralNetwork(
    classification=False,
    nb_hidden_layers=2,
    nb_neurons=[8, 8],
    activation_fct=class_enums.ActivationFunctions.sigmoid,
    optimizer=class_enums.Optimizer.Adadelta,
    epochs=300,
    batch=24,
    feature_names=feature_names,
    learning_rate=0.0001,
    validation_features=validation_training,
    validation_targets=validation_target,
)

# Train model
nn.train(
    training_data,
    target_data,
)
```
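To get a feel for the size of the network configured above, the sketch below counts trainable parameters for two hidden layers of 8 neurons. It rests on two assumptions that this tutorial does not confirm: that the network receives 6 input features (tip, displacement, resistivity, NAP, x and y, as the add_features call suggests) and that each weight and bias is described by a Gaussian with its own mean and standard deviation, which doubles the count. `count_parameters` is a hypothetical helper.

```python
def count_parameters(layer_sizes, bayesian=False):
    """Count trainable parameters of a fully connected network.

    layer_sizes lists the width of every layer, input and output included.
    With bayesian=True each weight and bias is assumed to be a Gaussian
    with a trainable mean and standard deviation (two values each).
    """
    deterministic = sum(
        n_in * n_out + n_out  # weights plus biases of one layer
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )
    return 2 * deterministic if bayesian else deterministic


# Assumed layout: 6 inputs, two hidden layers of 8 neurons, 1 output (IC).
layer_sizes = [6, 8, 8, 1]
print("simple NN parameters:", count_parameters(layer_sizes))
print("BNN parameters:", count_parameters(layer_sizes, bayesian=True))
```

Under these assumptions the BNN has twice as many trainable values as the equivalent simple network, which is one reason BNNs tend to be slower to train.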

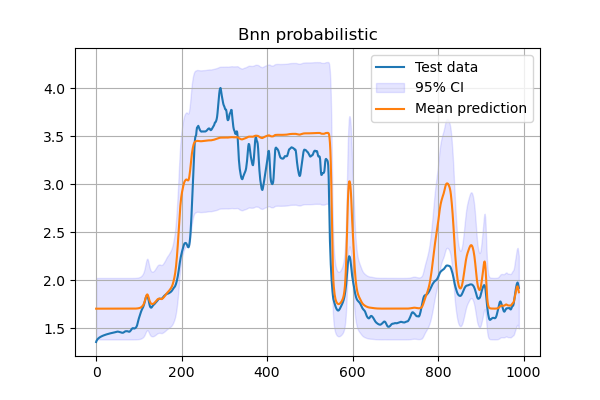

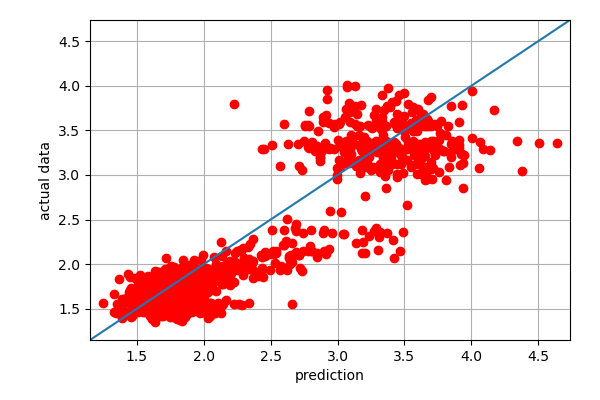

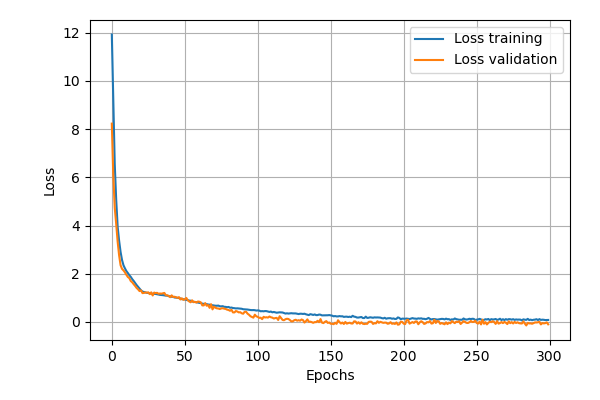

After training the results of the process can be extracted by using functions embedded in the DataFusionTools package.

nn.predict(validation_training)

nn.plot_cost_function(output_folder=Path("./test_bnn"))

nn.plot_confidence_band(validation_target, output_folder=Path("./test_bnn"))

nn.plot_fitted_line(validation_target, output_folder=Path("./test_bnn"))

Which produce the following graphs
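A confidence band of the kind plotted above can, in general, be derived from repeated stochastic predictions of a BNN. The sketch below shows one common way to do this and is not the DataFusionTools implementation: it assumes several sampled prediction vectors are available and that the spread at each point is roughly normal, so that 1.96 standard deviations approximates a 95% band. `confidence_band` is a hypothetical helper.

```python
import statistics


def confidence_band(sampled_predictions, z=1.96):
    """Return (lower, mean, upper) for every prediction point.

    sampled_predictions is a list of prediction vectors, one per stochastic
    forward pass of the network. z=1.96 corresponds to an approximate 95%
    band under a normal assumption.
    """
    if len(sampled_predictions) < 2:
        raise ValueError("at least two sampled predictions are needed")
    band = []
    # zip(*...) groups the samples belonging to the same prediction point.
    for values in zip(*sampled_predictions):
        mean = statistics.mean(values)
        std = statistics.stdev(values)
        band.append((mean - z * std, mean, mean + z * std))
    return band


# Three made-up forward passes over two validation points.
samples = [[1.0, 2.0], [1.2, 2.0], [0.8, 2.0]]
for lower, mean, upper in confidence_band(samples):
    print(f"{mean:.2f} in [{lower:.2f}, {upper:.2f}]")
```

Points where the sampled predictions disagree get a wide band, while points where every pass gives the same value get a band of zero width.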